This post will be a little more technical than the usual ones. Nonetheless, I believe there is a "market" for it: students who are just learning quantum mechanics and might need to dispel much of the baloney that is told about quantum weirdness. Recently I was discussing with one of the cobloggers of astronomers in the wild a limerick from David Morin's mechanics book:

When walking, I know that my aim
Is caused by the ghosts with my name.
And although I don't see
Where they walk next to me,
I know they're all there, just the same.

This is mentioned just as a side note when discussing the stationary action principle, specifically in the context of asking whether there is a deep reason behind it. The stationary action principle says that the quantity S, called the action and given by

\[ S= \int_{t_a}^{t_b} dt\ L(x,\dot{x};t) \]

should take a stationary value. That means that, of all the possible paths from a to b, a classical particle will take the one for which the action is stationary (usually a minimum). This is the principle behind classical mechanics. The motion of everything we can see around us, including the stars in the sky or matter around a black hole, follows from this principle.
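If you want to see this with numbers, here is a minimal sketch in Python. It is only an illustration under my own assumptions: a ball thrown straight up in uniform gravity, with Lagrangian L = (1/2) m v² − m g x, and the action approximated by a sum over small time steps. The function names (`action`, `classical_path`, `perturbed`) and the sine-shaped wiggle are hypothetical choices, not anything taken from Morin's book.

```python
import math

def action(path, t_a, t_b, mass=1.0, g=9.8):
    """Discretized action: sum of (kinetic - potential) * dt over each time step."""
    n = len(path) - 1
    dt = (t_b - t_a) / n
    total = 0.0
    for i in range(n):
        velocity = (path[i + 1] - path[i]) / dt
        midpoint = 0.5 * (path[i + 1] + path[i])
        lagrangian = 0.5 * mass * velocity ** 2 - mass * g * midpoint
        total += lagrangian * dt
    return total

def classical_path(n, t_a, t_b, x_a, x_b, g=9.8):
    """Sample the parabola that solves x'' = -g and joins (t_a, x_a) to (t_b, x_b)."""
    duration = t_b - t_a
    v0 = (x_b - x_a) / duration + 0.5 * g * duration
    times = [i * duration / n for i in range(n + 1)]
    return [x_a + v0 * t - 0.5 * g * t ** 2 for t in times]

def perturbed(path, eps):
    """Add a wiggle of size eps that (up to rounding) vanishes at both endpoints."""
    n = len(path) - 1
    return [x + eps * math.sin(math.pi * i / n) for i, x in enumerate(path)]
```

Calling `action(classical_path(100, 0.0, 1.0, 0.0, 0.0), 0.0, 1.0)` and comparing it with `action(perturbed(path, eps), 0.0, 1.0)` for a few values of `eps` should show the wiggled paths always costing more action than the parabola, which in this particular example is a true minimum.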

Hence, it would be great to know if there is a reason behind this principle. Well, yes, there is. It is deeply rooted in quantum mechanics and its essence is captured in the limerick above.

So, the purpose of the next few posts will be to see how we can pass from the quantum description of the world to the kind of phenomena we observe every day when dealing with baseballs, pulleys and all that.

This is not a trivial question. Every experiment has confirmed the validity of quantum mechanics as the correct description of our world, but it is in stark contrast with the intuition we have all developed from observing macroscopic objects all our lives. At first glance, the two descriptions are radically different. To see how weird quantum behavior is for us macroscopic beings, let's consider one situation that shows most of the quantum subtleties: the double slit experiment.

Consider a particle source S, placed at a point A. In front of it, we place a screen C with two slits in it. Hence, we expect that any particle arriving at a second screen B, where the arrivals are measured, must have passed through one of the slits. This configuration is shown below.

Well, if we do this experiment with marbles, baseballs, grains of sand or any other classical object coming out of the source S, then the outcome will be just what we expect. Namely, if we shut one of the slits, then the distribution of marbles arriving at B will peak right in front of the open slit. The distribution of marbles arriving at B when both slits are open is just the sum of the peaks produced by the particles entering through each slit. This is shown below.

Here, the outcome of the double slit experiment with classical particles is shown. If we shut one slit, we get one sharp distribution (shown in blue). The outcome with both slits open is just the sum of the two peaks and it is shown in red.

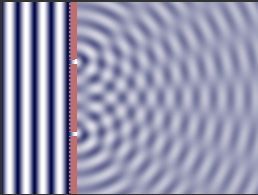
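The "just a sum of peaks" statement can be written down in a few lines. This is only a sketch: I am assuming each single-slit peak is a Gaussian, and the slit positions and widths are made-up numbers, so the function names and defaults here are hypothetical.

```python
import math

def single_slit_peak(x, slit_position, width=1.0):
    """Assumed Gaussian density of marbles arriving at x on B through one slit."""
    norm = 1.0 / (width * math.sqrt(2.0 * math.pi))
    return norm * math.exp(-0.5 * ((x - slit_position) / width) ** 2)

def both_slits_open(x, slit_1=-2.0, slit_2=2.0, width=1.0):
    """Classical rule: the arrivals through each slit simply add."""
    return single_slit_peak(x, slit_1, width) + single_slit_peak(x, slit_2, width)
```

With these numbers the combined curve has its two humps in front of the slits and a dip in between, as in the red curve of the figure.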

There is a second classical object that we can throw against our screen at C: waves. So, let's imagine that we place the whole setup in a pool and the source at A stirs the water a little bit so that it creates waves. In a realistic experiment, we should place S very far from C so we can have some nice plane waves arriving at C, but let's ignore such nuances for our purposes. When the incoming wave arrives at C, each slit will act as a new source and produce an outgoing wave. Since we have two slits, the two outgoing waves will be out of phase in some places and in phase in others. This is better seen in the picture below.

The darker zones correspond to places where destructive interference happens, namely where the waves are completely out of phase and cancel out. On the other hand, the lighter zones are where the waves are in phase and constructive interference happens. The outcome of all this is that the distribution at B will be different from the one we got using marbles, as we now have an interference pattern, as shown below.

As you can see, we now have many peaks, and the biggest one is actually in front of a closed part of the screen C! This is just the result of the way waves behave: they can add to or subtract from each other, in stark contrast with marbles, as we do not expect one marble to cancel another!
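To make the adding and cancelling concrete, here is a small sketch for two idealized slits. The assumptions are mine: each slit emits a wave of unit strength, I ignore the fall-off of the wave with distance, and the wavelength, slit positions and screen distance are arbitrary illustrative numbers. The key point is that the waves are added first and squared afterwards.

```python
import cmath
import math

def wave_amplitude(x, slit_position, wavelength=1.0, screen_distance=100.0):
    """Complex amplitude at point x on B of a unit-strength wave leaving one slit."""
    path_length = math.hypot(screen_distance, x - slit_position)
    wavenumber = 2.0 * math.pi / wavelength
    return cmath.exp(1j * wavenumber * path_length)

def intensity(x, slits=(-2.5, 2.5), wavelength=1.0, screen_distance=100.0):
    """Add the waves from all open slits, then square the size of the total."""
    total = sum(wave_amplitude(x, s, wavelength, screen_distance) for s in slits)
    return abs(total) ** 2
```

With one slit open, `intensity(x, slits=(2.5,))` is 1 everywhere in this idealization. With both open, the point midway between the slits gets 4 rather than 1 + 1 = 2, while near x = 10 (where the two path lengths differ by about half a wavelength) almost nothing arrives.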

So, if an electron behaves exactly like a marble, we expect that the chance of arrival at some point x of the target B will obey two simple rules:

- Each electron which passes from the source S to some point x in B should go through either hole 1 or 2.

- The chance of arrival at x is the sum of two parts: P1, the chance of arriving after coming through hole 1, plus P2, the chance of arriving after coming through hole 2.

I repeat, this is the case for classical particles. Even in classical mechanics we can get a different behavior by using waves, as illustrated above. Now, we usually imagine electrons as lil' charged things, and with a good deal of naivety we would expect these two rules to extend to electrons.

Well, what happens when we use electrons? It turns out that we get a distribution identical to the one we get from waves! This is a good place to stop today, but nonetheless I should tease you a little.

The questions that arise immediately are: is the electron a wave? If so, how can we reconcile that with our intuition that it should pass through one hole? How can we measure that? As of now, we could actually say that the electron is indeed a wave. Nonetheless, that contradicts our picture of electric current as a flow of electrons, which has proved to be extremely successful. Additionally, when electrons are used to strip other electrons from a metal, the behavior is consistent with particles (the analog with photons is the well-known photoelectric effect). Actually, all the subsequent discussion for the quantum case can be carried out with photons as well. This kind of behavior, with electrons acting like confused teens who cannot make up their minds about being particles or waves, is usually called wave/particle duality. Hopefully, by the end of this series of posts you will agree with me that such duality is a rather childish way to describe things: electrons are particles, which get their peculiar behavior from the way probabilities/amplitudes add. Furthermore, the mechanism to get the amplitude will lead us directly to the classical world with which we are all so familiar.

Besides, now that we cannot use the two rules stated above, what rules should we use to calculate the distribution at B? This last question is essential: the big difference between the classical and quantum worlds is in the way we calculate probabilities. That is the topic for the next post. Meanwhile, you can read what happened when this experiment was performed in 1998 by E. Buks et al.

For all of you able to read Spanish, I decided to continue this "story" on my Spanish website: sinédoque. This was because the capabilities of Blogger were too meager at the time, especially regarding mathematical expressions. I have just implemented a new system on this blog, so maybe some more "math heavy" posts will appear here in the future. Stay tuned.
